robots.txt: The ultimate guide to robots.txt

What is a robots.txt file?

A robots.txt file is a text file which is read by search engine spiders and follows a strict syntax. These spiders are also called robots – hence the name – and the syntax of the file is strict simply because it has to be computer-readable. That means there’s no room for error here – something is either 1, or 0.

Also called the “Robots Exclusion Protocol”, the robots.txt file is the result of a consensus among early search engine spider developers. It’s not an official standard set by any standards organization, but all major search engines adhere to it.

What does the robots.txt file do?

humans.txt

Once upon a time, some developers sat down and decided that, since the web is supposed to be for humans, and since robots get a file on a website, the humans who built it should have one, too. So they created the humans.txt standard as a way of letting people know who worked on a website, amongst other things.

Search engines index the web by spidering pages, following links to go from site A to site B to site C and so on. Before a search engine spiders any page on a domain it hasn’t encountered before, it will open that domain’s robots.txt file, which tells the search engine which URLs on that site it’s allowed to crawl.

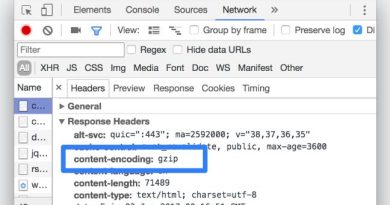

Search engines typically cache the contents of the robots.txt, but will usually refresh it several times a day, so changes will be reflected fairly quickly.

Where should I put my robots.txt file?

The robots.txt file should always be at the root of your domain. So if your domain is www.example.com, it should be found at https://www.example.com/robots.txt.

It’s also very important that your robots.txt file is actually called robots.txt. The name is case sensitive, so get that right or it just won’t work.
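Because the location is fixed, the robots.txt URL can be derived from any page URL on the same host. Here is a minimal Python sketch of that step using only the standard library; the function name robots_txt_url and the sample page URL are made up for illustration.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_txt_url(page_url: str) -> str:
    """Return the robots.txt URL for the host that serves page_url."""
    parts = urlsplit(page_url)
    # Keep scheme and host, replace the path, drop query string and fragment.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


print(robots_txt_url("https://www.example.com/blog/post?id=1"))
# prints https://www.example.com/robots.txt
```

Note that the file name is always written in lowercase here, in line with the case-sensitivity warning above.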


Pros and cons of using robots.txt

Pro: managing crawl budget

It’s generally understood that a search spider arrives at a website with a pre-determined “allowance” for how many pages it will crawl (or, how much resource/time it’ll spend, based on a site’s authority/size/reputation), and SEOs call this the crawl budget. This means that if you block sections of your site from the search engine spider, you can allow your crawl budget to be used for other sections.

It can sometimes be highly beneficial to block the search engines from crawling problematic sections of your site, especially on sites where a lot of SEO clean-up has to be done. Once you’ve tidied things up, you can let them back in.

A note on blocking query parameters

One situation where crawl budget is particularly important is when your site uses a lot of query string parameters to filter and sort. Let’s say you have 10 different query parameters, each with different values that can be used in any combination. This leads to hundreds if not thousands of possible URLs. Blocking all query parameters from being crawled will help make sure the search engine only spiders your site’s main URLs and won’t go into the enormous trap that you’d otherwise create.

This line blocks all URLs on your site containing a query string:

Disallow: /*?*

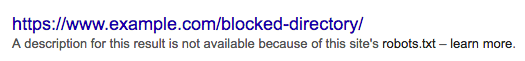
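To get a feel for how quickly filter URLs multiply, here is a rough back-of-the-envelope count in Python. The numbers are assumptions, not measurements: every parameter is optional, every parameter has the same number of possible values, and the order of parameters in the URL is ignored.

```python
def count_filter_urls(num_params: int, values_per_param: int) -> int:
    """Count URL variants when each parameter is either absent or set to one value."""
    # Each parameter contributes (values + 1) choices: one per value, plus "not set".
    # The total includes the plain URL with no parameters at all.
    return (values_per_param + 1) ** num_params


# Assumed example: 10 parameters with only 2 possible values each.
print(count_filter_urls(10, 2))  # prints 59049
```

Even under these modest assumptions, a single listing page can fan out into far more URLs than a spider should spend its crawl budget on.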

Con: not removing a page from search results

Even though you can use the robots.txt file to tell a spider where it can’t go on your site, you can’t use it to tell a search engine which URLs not to show in the search results – in other words, blocking a URL won’t stop it from being indexed. If the search engine finds enough links to that URL, it will include it; it just won’t know what’s on that page, so the result appears as a bare listing without a meaningful description.

If you want to reliably block a page from showing up in the search results, you need to use a meta robots noindex tag. That means that, in order to find the noindex tag, the search engine has to be able to access that page, so don’t block it with robots.txt.

Noindex directives

It used to be possible to add ‘noindex’ directives in your robots.txt, to remove URLs from Google’s search results, and to avoid these ‘fragments’ showing up. This is no longer supported (and technically, never was).

Con: not spreading link value

If a search engine can’t crawl a page, it can’t spread the link value across the links on that page. When a page is blocked with robots.txt, it’s a dead-end. Any link value which might have flowed to (and through) that page is lost.

robots.txt syntax

WordPress robots.txt

We have an entire article on how best to set up your robots.txt for WordPress. Don’t forget you can edit your site’s robots.txt file in the Yoast SEO Tools → File editor section.

A robots.txt file consists of one or more blocks of directives, each starting with a user-agent line. The “user-agent” is the name of the specific spider it addresses. You can either have one block for all search engines, using a wildcard for the user-agent, or specific blocks for specific search engines. A search engine spider will always pick the block that best matches its name.

These blocks look like this (don’t be scared, we’ll explain below):

User-agent: *

Disallow: /

User-agent: Googlebot

Disallow:

User-agent: bingbot

Disallow: /not-for-bing/

Directives like Allow and Disallow should not be case sensitive, so it’s up to you whether you write them lowercase or capitalize them. The values are case sensitive, however: /photo/ is not the same as /Photo/. We like to capitalize directives because it makes the file easier (for humans) to read.

The User-agent directive

The first bit of every block of directives is the user-agent, which identifies a specific spider. The user-agent field is matched against that specific spider’s (usually longer) user-agent, so for instance the most common spider from Google has the following user-agent:

Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)

So if you want to tell this spider what to do, a relatively simple User-agent: Googlebot line will do the trick.

Most search engines have multiple spiders. They will use a specific spider for their normal index, for their ad programs, for images, for videos, etc.

Search engines will always choose the most specific block of directives they can find. Say you have 3 sets of directives: one for *, one for Googlebot and one for Googlebot-News. If a bot comes by whose user-agent is Googlebot-Video, it would follow the Googlebot restrictions. A bot with the user-agent Googlebot-News would use the more specific Googlebot-News directives.
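The selection logic described above can be sketched in a few lines of Python. This is a simplified illustration, not how any particular search engine implements it: it assumes a case-insensitive prefix match between the block name and the crawler name, and the function name pick_group is hypothetical.

```python
from typing import List, Optional


def pick_group(crawler_name: str, group_names: List[str]) -> Optional[str]:
    """Pick the most specific user-agent block for a crawler (simplified)."""
    name = crawler_name.lower()
    # Collect every named block whose name is a prefix of the crawler's name.
    matches = [g for g in group_names if g != "*" and name.startswith(g.lower())]
    if matches:
        # The longest matching name counts as the most specific block.
        return max(matches, key=len)
    # Fall back to the wildcard block, if there is one.
    return "*" if "*" in group_names else None


groups = ["*", "Googlebot", "Googlebot-News"]
print(pick_group("Googlebot-Video", groups))  # prints Googlebot
print(pick_group("Googlebot-News", groups))   # prints Googlebot-News
```

A crawler that matches none of the named blocks, such as bingbot in this example, ends up with the wildcard block.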


The most common user agents for search engine spiders

Here’s a list of the user-agents you can use in your robots.txt file to match the most commonly used search engines:

| Search engine | Field | User-agent |
|---|---|---|
| Baidu | General | baiduspider |
| Baidu | Images | baiduspider-image |
| Baidu | Mobile | baiduspider-mobile |
| Baidu | News | baiduspider-news |
| Baidu | Video | baiduspider-video |
| Bing | General | bingbot |
| Bing | General | msnbot |
| Bing | Images & Video | msnbot-media |
| Bing | Ads | adidxbot |
| Google | General | Googlebot |
| Google | Images | Googlebot-Image |
| Google | Mobile | Googlebot-Mobile |
| Google | News | Googlebot-News |
| Google | Video | Googlebot-Video |
| Google | AdSense | Mediapartners-Google |
| Google | AdWords | AdsBot-Google |
| Yahoo! | General | slurp |
| Yandex | General | yandex |

The Disallow directive

The second line in any block of directives is the Disallow line. You can have one or more of these lines, specifying which parts of the site the specified spider can’t access. An empty Disallow line means you’re not disallowing anything, so basically it means that a spider can access all sections of your site.

The example below would block all search engines that “listen” to robots.txt from crawling your site.

User-agent: *

Disallow: /

The example below would, with only one character less, allow all search engines to crawl your entire site.

User-agent: *

Disallow:

The example below would block Google from crawling the Photo directory on your site – and everything in it.

User-agent: googlebot

Disallow: /Photo

This means all the subdirectories of the /Photo directory would also not be spidered. It would not block Google from crawling the /photo directory, as these lines are case sensitive.

This would also block Google from accessing URLs containing /Photo, such as /Photography/.
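You can check this prefix behavior yourself with Python's standard urllib.robotparser module. The sketch below feeds it the two lines from the example; the host name and the sample paths are placeholders, and the helper is_blocked is only there for readability.

```python
from urllib.robotparser import RobotFileParser

rules = """\
User-agent: googlebot
Disallow: /Photo
"""

parser = RobotFileParser()
parser.parse(rules.splitlines())


def is_blocked(path: str) -> bool:
    """True if the rules above stop Googlebot from fetching this path."""
    return not parser.can_fetch("Googlebot", "https://www.example.com" + path)


for path in ["/Photo/holiday.jpg", "/Photography/", "/photo/holiday.jpg"]:
    print(path, "blocked" if is_blocked(path) else "allowed")
```

The first two paths come out as blocked because they start with /Photo; the lowercase /photo/ path stays crawlable.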

How to use wildcards/regular expressions

“Officially”, the robots.txt standard doesn’t support regular expressions or wildcards. However, all major search engines do understand them. This means you can use lines like this to block groups of files:

Disallow: /*.php

Disallow: /copyrighted-images/*.jpg

In the example above, * is expanded to whatever filename it matches. Note that the rest of the line is still case sensitive, so the second line above will not block a file called /copyrighted-images/example.JPG from being crawled.

Some search engines, like Google, allow for more complicated regular expressions, but be aware that some search engines might not understand this logic. The most useful feature this adds is the $, which indicates the end of a URL. In the following example you can see what this does:

Disallow: /*.php$

This means /index.php can’t be crawled, but /index.php?p=1 could be. Of course, this is only useful in very specific circumstances and also pretty dangerous: it’s easy to unblock things you didn’t actually want to unblock.
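One way to reason about these patterns is to translate them into ordinary regular expressions. The Python sketch below does that under two assumptions: * matches any run of characters, and a trailing $ anchors the end of the URL. It is an approximation for experimenting, not any search engine's actual matcher, and the function names are made up.

```python
import re


def pattern_to_regex(pattern: str) -> "re.Pattern[str]":
    """Translate a robots.txt path pattern into a compiled regular expression."""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # Escape the literal parts and let each * match any run of characters.
    body = ".*".join(re.escape(part) for part in pattern.split("*"))
    return re.compile(body + ("$" if anchored else ""))


def matches(pattern: str, url_path: str) -> bool:
    """True if the pattern matches the URL path from its first character."""
    return pattern_to_regex(pattern).match(url_path) is not None


print(matches("/*.php$", "/index.php"))      # prints True
print(matches("/*.php$", "/index.php?p=1"))  # prints False
print(matches("/*.php", "/index.php?p=1"))   # prints True
```

Running a handful of your own URLs through a helper like this before you deploy a wildcard rule makes it easier to spot the accidental unblocking mentioned above.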

Non-standard robots.txt crawl directives

As well as the Disallow and User-agent directives there are a couple of other crawl directives you can use. These directives are not supported by all search engine crawlers so make sure you’re aware of their limitations.

The Allow directive

While not in the original “specification”, there was talk very early on of an allow directive. Most search engines seem to understand it, and it allows for simple, and very readable directives like this:

Disallow: /wp-admin/

Allow: /wp-admin/admin-ajax.php

The only other way of achieving the same result without an allow directive would have been to specifically disallow every single file in the wp-admin folder.
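How a crawler resolves the conflict between these two lines is up to the crawler. Google documents that the most specific (longest) matching rule wins, and that Allow wins a tie. The Python sketch below implements that rule for plain path prefixes only; it ignores wildcards, and the function name and rule format are assumptions for illustration.

```python
from typing import List, Tuple

Rule = Tuple[str, str]  # (directive, path prefix), e.g. ("disallow", "/wp-admin/")


def is_allowed(path: str, rules: List[Rule]) -> bool:
    """Apply longest-match precedence to simple prefix rules."""
    best_length = -1
    allowed = True  # With no matching rule, crawling is allowed.
    for directive, prefix in rules:
        if not prefix or not path.startswith(prefix):
            continue
        is_allow = directive.lower() == "allow"
        # A longer prefix is more specific; on equal length, Allow wins.
        if len(prefix) > best_length or (len(prefix) == best_length and is_allow):
            best_length = len(prefix)
            allowed = is_allow
    return allowed


rules = [("disallow", "/wp-admin/"), ("allow", "/wp-admin/admin-ajax.php")]
print(is_allowed("/wp-admin/admin-ajax.php", rules))  # prints True
print(is_allowed("/wp-admin/options.php", rules))     # prints False
```

Parsers that simply apply the first matching line in file order would treat this example differently, which is one more reason to test rules against the crawlers you care about.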

The host directive

Supported by Yandex (and not by Google, despite what some posts say), this directive lets you decide whether you want the search engine to show example.com or www.example.com. Simply specifying it like this does the trick:

host: example.com

But because only Yandex supports the host directive, we wouldn’t advise you to rely on it, especially as it doesn’t allow you to define a scheme (http or https) either. A better solution that works for all search engines would be to 301 redirect the hostnames that you don’t want in the index to the version that you do want. In our case, we redirect www.yoast.com to yoast.com.

The crawl-delay directive

Yahoo!, Bing and Yandex can sometimes be fairly crawl-hungry, but luckily they all respond to the crawl-delay directive, which slows them down. And while these search engines have slightly different ways of reading the directive, the end result is basically the same.

A line like the one below would instruct Yahoo! and Bing to wait 10 seconds after a crawl action, while Yandex would only access your site once in every 10 seconds. It’s a semantic difference, but still interesting to know. Here’s the example crawl-delay line:

crawl-delay: 10

Do take care when using the crawl-delay directive. By setting a crawl delay of 10 seconds you’re only allowing these search engines to access 8,640 pages a day. This might seem plenty for a small site, but on large sites it isn’t very many. On the other hand, if you get next to no traffic from these search engines, it’s a good way to save some bandwidth.
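The 8,640 figure is simply the number of seconds in a day divided by the delay. A small Python helper (the name max_pages_per_day is made up) lets you try other values, assuming one fetch per delay interval:

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400 seconds


def max_pages_per_day(crawl_delay_seconds: int) -> int:
    """Upper bound on daily fetches when a crawler makes one request per delay interval."""
    return SECONDS_PER_DAY // crawl_delay_seconds


print(max_pages_per_day(10))  # prints 8640
print(max_pages_per_day(1))   # prints 86400
```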


The sitemap directive for XML Sitemaps

Using the sitemap directive you can tell search engines – specifically Bing, Yandex and Google – where to find your XML sitemap. You can, of course, also submit your XML sitemaps to each search engine using their respective webmaster tools solutions, and we strongly recommend you do, because search engine webmaster tools programs will give you lots of valuable information about your site. If you don’t want to do that, adding a sitemap line to your robots.txt is a good quick alternative.

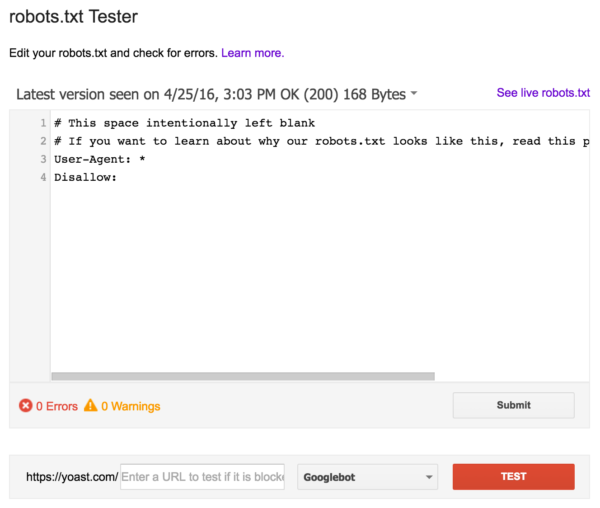
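As a quick illustration, the Python sketch below parses a small robots.txt that contains a sitemap line and reads the sitemap URL back with the standard urllib.robotparser module (the site_maps method needs Python 3.8 or later). The sitemap URL is a placeholder, not a real file.

```python
from urllib.robotparser import RobotFileParser

# Example file content; the sitemap location is an assumed placeholder.
robots_txt = """\
User-agent: *
Disallow:

Sitemap: https://www.example.com/sitemap_index.xml
"""

parser = RobotFileParser()
parser.parse(robots_txt.splitlines())

# site_maps() returns a list of sitemap URLs, or None if the file lists none.
sitemaps = parser.site_maps()
print(sitemaps)
```

The sitemap line stands apart from the user-agent blocks and takes a full, absolute URL.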

Validate your robots.txt

There are various tools out there that can help you validate your robots.txt, but when it comes to validating crawl directives, we always prefer to go to the source. Google has a robots.txt testing tool in its Google Search Console (under the ‘Old version’ menu) and we’d highly recommend using that.

Be sure to test your changes thoroughly before you put them live! You wouldn’t be the first to accidentally use robots.txt to block your entire site, and to slip into search engine oblivion!
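Alongside a search engine's own testing tool, a small local check can catch the worst mistakes before a file goes live. The Python sketch below uses the standard urllib.robotparser module to list which of your must-crawl URLs a draft file would block. The function name, the draft content, and the URLs are all hypothetical, and this parser only does plain prefix matching, so it will not evaluate * or $ patterns the way Google does.

```python
from typing import List
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt: str, user_agent: str, must_crawl: List[str]) -> List[str]:
    """Return the URLs from must_crawl that the draft robots.txt would block."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in must_crawl if not parser.can_fetch(user_agent, url)]


# A draft that accidentally blocks the whole site.
draft = "User-agent: *\nDisallow: /\n"
important = ["https://www.example.com/", "https://www.example.com/blog/"]

for url in blocked_urls(draft, "Googlebot", important):
    print("Blocked:", url)
```

An empty result means none of the listed URLs are blocked under this simplified matching; it does not replace testing with the search engines' own tools.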

See the code

In July 2019, Google announced that they were making their robots.txt Parser open source. That means that, if you really want to get into the nuts and bolts, you can go and see how their code works (and, even use it yourself, or propose modifications to it).